Shortly before the holidays I made donations to three organizations: the Free Software Foundation, Wikipedia, and Creative Commons. If you'll kindly indulge me for a minute, I'll explain why I think the work of these organizations is so important to an open internet and a free and prosperous society. Consider giving whatever you can spare (whenever you can spare it) to one of these groups.

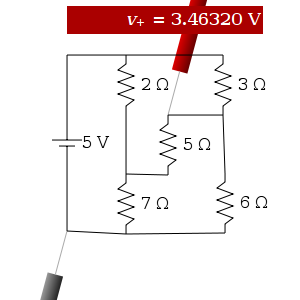

1. So much of our everyday lives—both work and play—depends on the operation of software that we cannot really claim to be free people unless we are using free software. I'm donating to the Free Software Foundation both as thanks for the GNU operating system and in support of their campaigns. The GNU OS—from ls to emacs, and everything in between, and beyond—eclipses, in terms of power and productivity, pretty much any other OS you can buy. But peace of mind is even more valuable than technical power. As someone whose livelihood directly depends on software, it would be foolish in the extreme for me to compromise my autonomy and financial security by using proprietary software and "giving the keys to someone else."

The FSF also promotes awareness of a number of threats to freedom and innovation, like DRM (vile, vile stuff) and proprietary document formats (which are antithetical to the democratic idea of the free interchange of information).

2. I'd say that being able to learn about anything, anytime, on Wikipedia has been a pretty life-changing experience. I don't need to explain what this is like to most of you. But Wikipedia isn't valuable just because it satisfies my idle curiosities. One of the hats I wear is that of "teacher," and I love sharing knowledge. The dissemination of knowledge is one of the surest ways to produce prosperity. I'm donating to Wikipedia on behalf of children and other curious people everywhere.

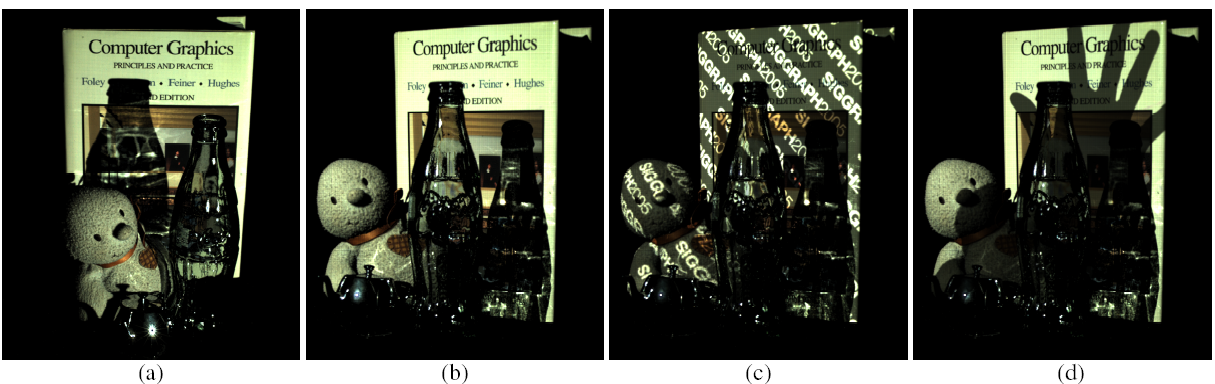

3. Creative Commons is creating a body of actually useful creative works, as well as encouraging people to rethink copyright law. I feel like I've discovered a gem every time I find an ebook I can copy for offline reading, or music I can share with friends, or a comic or photo that I can put on my blog. What is perhaps more valuable is that CC is planting the idea in people's heads that maybe we can be more prosperous as a society if authors allow their work to be used in more ways rather than fewer.