This is a summary of the technique in the paper Dual Photography, which was presented at SIGGRAPH 2005. What the authors managed to do is very clever, and you can even understand the technique without knowing much high-brow math. Color me impressed.

Imagine you are photographing a scene which contains objects lit by a lamp. Suppose the light source is replaced with a structured light source, basically a projector. We could turn all the pixels of the projector on and get what is essentially a lamp. But in general we could light just some of the projector pixels, and illuminate the scene with whatever weirdly shaped light we want.

Fact: light transport is linear. So the relationship between the input vector p (which pixels on the projector are lit) and the output vector c (the brightness on each camera pixel) can be described by a matrix multiplication: c = T p. The ijth element of the matrix T is the brightness of the ith camera pixel when the jth projector pixel (and nothing else) is lit with intensity 1. (If the camera and the light source both have resolution of, say, 103×103, then p and c both have length 106, and T is a 106×106 matrix.)

We can actually construct the matrix T by lighting the first pixel, taking a picture, lighting the second pixel, taking a picture, etc. (each picture yields one column of the matrix T). And once we've done this, we can plug in any vector p we want to see what our scene would look like with arbitrary illumination from the projector. So, once we have this matrix T which completely characterizes the response of this scene to lights, we can change the lighting of the scene in post-processing.

That's already kind of neat, but the most impressive trick here is based on the fact that light transport follows the principle of reciprocity. If light of magnitude 1 enters a scene from a projector pixel a and lights up camera pixel b with intensity α, then a ray of light could just as well have entered at b and exited at a, and that light will also be passed with the same coefficient α. This is easily seen if the scene contains mirrors, but it's actually true in general, even if light is partially absorbed, reflected in funny ways on the scene, etc.

Now, what happens if we swap the locations of the projector and the camera in our scene? What's the matrix T' associated with this new scene? Because of reciprocity, it's merely the transpose of the matrix T: all the coefficients are the same, but they move around because we've swapped the 'input' and the 'output' of our system.

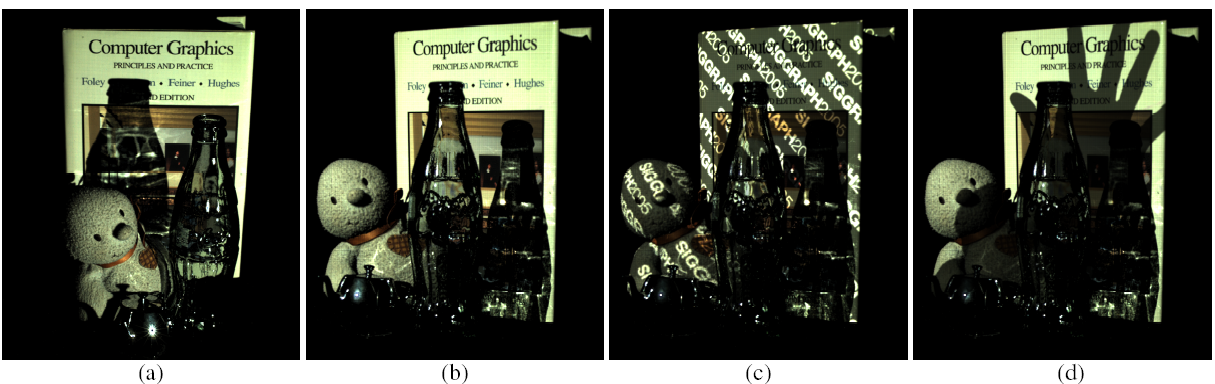

You'll notice something funny about this, which is that using T', we can reconstruct the scene as if it were viewed by a camera where the projector was, and lit by a projector where the camera was. The authors show that this actually works, and describe some tricks they played to get it working well. See the pictures below: the second, third, and fourth pictures can all be synthesized from the matrix T, despite the fact that the scene was never photographed from that angle.

The authors then use this technique to (I am not making this up) read the back of a playing card. Watch the video (linked from the web page), then read the paper.

The approach works no matter what the resolutions of the camera and projector are. You can even take a picture using a projector and a photodiode (i.e. a single element light sensor), because the effective resolution of the virtual camera is the resolution of the projector.