The search/replace mechanism in Emacs is a beautiful thing, full of subtleties.

It is pretty clear that its design has been honed to an edge by about 20 years of heavy use. You might not think there could be much to it, but a lot of apps that have come along since then could learn something from some of its features:

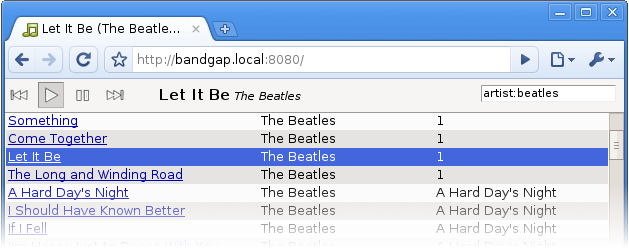

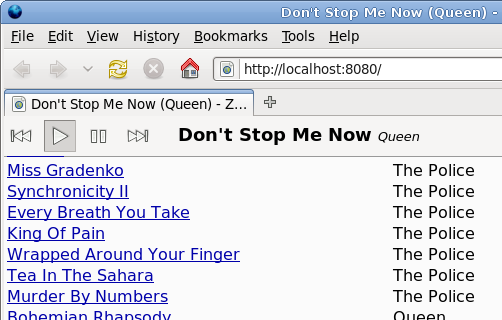

Incremental search. (isearch, or "find as you type") This has gained traction elsewhere in recent years. To search, press C-s and start typing; C-s again takes you to successive matches. Emacs highlights all the matches that are visible:

You can save quite a bit of typing this way because you can stop as soon as you've typed a prefix that uniquely identifies the text you're looking for.

In addition, the search UI is in the minibuffer, instead of in a separate dialog that covers part of your document.

Firefox, Chrome, and gedit all have fairly similar features; however, they only expose bare-bones search functionality through them. OpenOffice and most word processors bring up a big and jarring dialog.

Yanking from the document. This is like auto-completion for your searches. Suppose you're looking for all instances of "tag.artist" in your document. Press C-s and start typing...

Now you notice that the word following the highlighted match is exactly what you were looking for. Just press C-w to pull the next word into your query:

Then pressing C-s searches for subsequent occurrences of tag.artist. If you think this is useful, just think about how awesome it is when you need to search for superLongIdentifierNames in Java code.

You can also yank just the next character from the document, or everything up to the end of the line. Type C-h k C-s in Emacs to learn more.

Case replacement. If you have the text

Eat foo for breakfast! Foo is the best medicine. VOTE FOO FOR PRESIDENT

and replace (M-x query-replace or M-%) "foo" with "bar", what do you get? Exactly what you expect:

Eat bar for breakfast! Bar is the best medicine. VOTE BAR FOR PRESIDENT

This is clearly what you want most of the time, at least when you're editing prose. Gedit and OpenOffice don't do this (and Chrome and Firefox don't have a "replace" feature).

(What happens, you ask, if you're editing code, or anything else where case sensitivity is critical? Emacs's heuristic is to only turn on these smart replacements when your query and replacement text are both all lowercase. You can also manually toggle case-sensitivity in searching with M-c. For what it's worth, I've never noticed a false positive when editing code.)

Cycling behavior. Many programs do a poor job of letting you know when your search has wrapped around to the beginning of the document. In Firefox, I often find that I can inadvertently cycle through looking at all the search results two or three times before I realize what I've done.

After the last match in a document, Gedit and Chrome wrap around to the beginning silently. Firefox wraps around and concurrently displays a notification in the search UI. The problem is that if your attention is focused on the document content—as it probably is if you're scanning the individual matches—it's easy to miss the cues that tell you the search has wrapped around (e.g. Firefox's notification, or the scroll bar jumping back). You can end up back at the beginning of the document without realizing it.

At the other end of the spectrum, OpenOffice brings up a big and jarring dialog asking you "Yes/No" whether you want to continue searching at the beginning. Not so great, either.

How does Emacs deal with this? When you've reached the last match for a query in the buffer, pressing C-s does nothing, except raising a quiet notification that there are no remaining matches:

If you press C-s again, however, the search wraps back around to the beginning of the buffer.

That is, at the last match, C-s is not initially met with an immediate jump to a new match (as it usually is); Emacs gives just enough "pushback" to ensure that the user is aware when the search has wrapped, even if they're not paying any attention to the application chrome (minibuffer and scroll bars)! Very cool.

Displaying failed searches. When you type something that doesn't have a match, Emacs brings you to the maximal matching prefix of whatever you typed, and highlights the part of your query that wasn't found:

This kind of feedback gives you a good idea of whether or not you made a typo/error in your search: if you did, it often appears near the point where the text stopped matching.

If you only get all-or-nothing feedback telling you "no matches were found," then you are sort of groping around in the dark as you try to figure out what went wrong.

Search as a method for navigation. Perhaps the killer feature of isearch in Emacs is that you can use it as the primary method for moving around in a document, essentially replacing most of the functions of the PgUp/PgDn keys and the scroll bar in other applications.

Part of this has to do with the fact that the search UI is so lightweight (no dialog boxes!). However, isearch in Emacs is also cool because it facilitates many use cases:

- During a search, you can press C-g to cancel and return to where you started. This is handy if you just wanted to search for something in order to get a quick glance at it.

- …However, if you do indeed want to stop at the current match, press RET. Perhaps you want to make some quick edits or do some more extensive reading at that spot. But when you're done with that, you can press C-x C-x to return to where you originally were, because Emacs has set the mark to the place where you started.

- …Or, you can just continue editing there with no intention of returning to your original position.

Gedit and OpenOffice really only support that very last use case. You can hold your existing spot in a document with the cursor, but then you're limited to looking around with the scrollbars, which is cumbersome. Or you can use the Find feature or otherwise move the cursor, but then you've lost your original position.

Emacs is like your very own personal assistant: it remembers things so you don't have to.

Conclusion

I haven't mentioned many of the miscellaneous keyboard-accessible commands and options available from within isearch and query-replace. These are well worth your time to learn, although they are quite self-explanatory if you read the integrated help:

- For options available in isearch, type C-h k C-s.

- For options available in query-replace, type C-h at a query-replace prompt.

I'd sum up some of the more generalizable design principles behind search-and-replace in Emacs as follows:

- Reduce extraneous typing.

- Stay out of the user's way whenever possible.

- However, alert the user with immediate and useful feedback whenever necessary.